Wall Street continues to paint Nvidia as a GPU juggernaut riding successive booms in gaming, crypto, and AI model training. But this reductionist narrative overlooks the profound pivot underway: Nvidia is no longer just selling high-powered chips; it is building the foundational platform for the AI-native internet. With Blackwell and the full-stack platform that surrounds it, Nvidia is constructing what could become the most defensible digital tollbooth in modern computing.

The consensus has accepted the growth story. What it misses, however, is a key transition: from periodic GPU sales to recurring, high-margin inference monetization. It also overlooks how sovereign states are adopting Nvidia systems, treating Nvidia not as a supplier but as an indispensable ally in AI statecraft. This is not a hardware cycle; it is an infrastructural, geo-economic play. Hence, the upside is only just beginning.

Nvidia Runs on a Founder Operating System: Playbook, Not Personality

Jensen Huang is not just a visionary founder; he is the architect of a system for sustained innovation and relentless execution. Unlike most technology companies, which grow bloated with success, Nvidia has maintained company-wide agility through an internal rhythm of coordinated architecture launches, software refactoring, and developer onboarding. Each year, Nvidia does not merely introduce a faster chip; it delivers an entire system stack spanning silicon, networking, software, simulation, and developer tools. This organizational cadence compresses the time between hardware innovation and end-to-end adoption.

Nvidia’s internal organization reflects a deliberate protocolization that guards against decay at scale. Teams don’t merely ship features; they ship platforms on an orchestrated upgrade cycle. Software developers at the company, for example, are not isolated; they coordinate directly with hardware groups and industry domain experts to optimize CUDA libraries for domains ranging from healthcare to industrial robotics. This creates a virtuous loop: the more applications run on CUDA, the harder it becomes to unseat.

Beyond execution, it is the cultural DNA that shines through. That culture centers on long-term bets and product clarity, not on appeasing the market each quarter. Most trillion-dollar companies decelerate, but Nvidia keeps accelerating under its founder-operator, who still leads demos, sets roadmaps, and formulates strategy with almost startup-like fervor. It is this OS, not Huang’s charisma, that translates into organizational antifragility. Nvidia’s internal cadence, developer flywheel, and execution culture give it a durable velocity that mimics startup agility at trillion-dollar scale.

Source: IR Nvidia

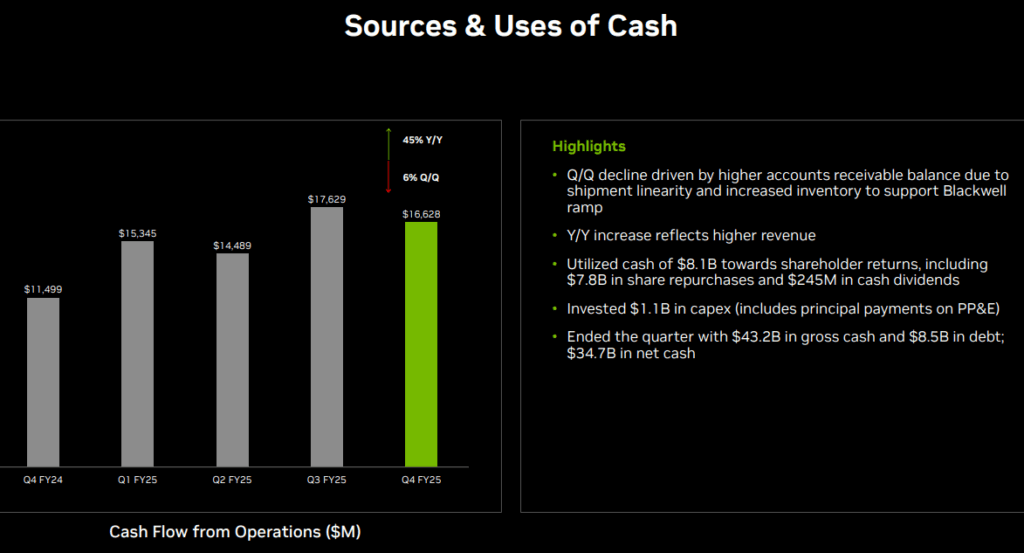

Nvidia’s Cash, Code, and Capital Cycles: The Most Capital-Efficient Infrastructure Play Ever

Nvidia stock’s valuation engine is as elegant as it is extreme. The company’s free cash flow (FCF) crossed $60 billion in its most recent fiscal year, a stunning figure, especially for such a capital-light business. This is not a company that builds enormous factories or leases hyperscale real estate. It orchestrates a global supply chain while retaining the lion’s share of the margin through IP, software, and system designs. All this requires only slightly over a billion dollars in CapEx, putting CapEx as a percentage of revenue below that of most SaaS companies, with margins better than many of them.

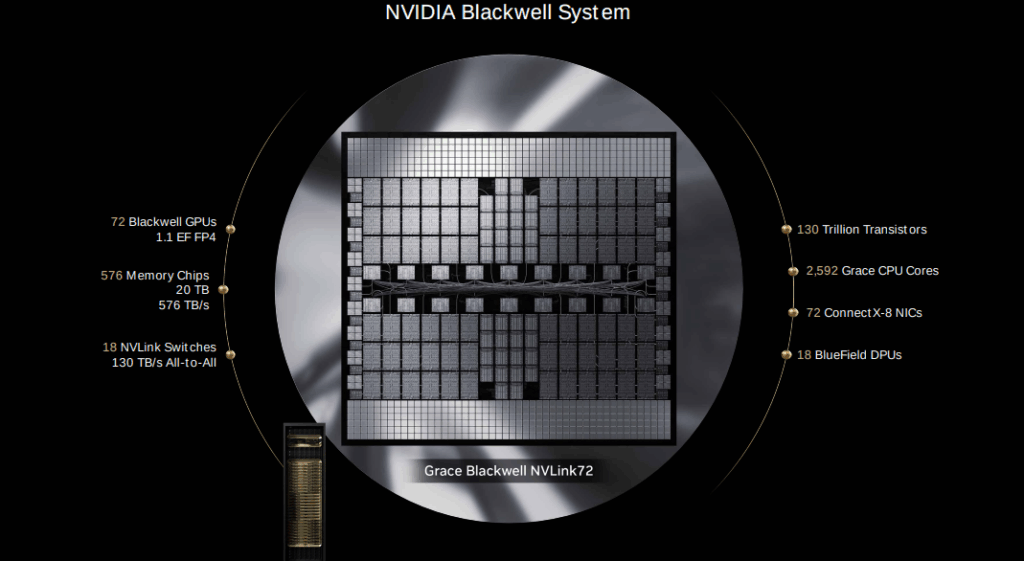
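Using only the two figures cited above (FCF above $60 billion, CapEx slightly over $1 billion), a back-of-envelope check shows how little operating cash flow (OCF) is reinvested in physical assets. This assumes the standard definition FCF = OCF minus CapEx and rounds both inputs, so treat the result as approximate:

```latex
\begin{aligned}
\text{OCF} &= \text{FCF} + \text{CapEx} \approx \$60\text{B} + \$1\text{B} = \$61\text{B} \\
\frac{\text{CapEx}}{\text{OCF}} &\approx \frac{1}{61} \approx 1.6\%
\end{aligned}
```

On these rounded numbers, roughly 98 cents of every operating-cash-flow dollar survives as free cash flow.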

Powering this is Nvidia’s architectural control of the end-to-end AI value chain. Blackwell, its new platform, is not merely a GPU but an end-to-end inference engine paired with a software stack comprising CUDA, TensorRT, Triton, and new serving frameworks such as Dynamo. Inference workloads now outnumber training workloads and persist far longer, and Nvidia is capitalizing on this increasingly persistent, monetizable phase of AI compute. Models are trained once but run forever.

Furthermore, Nvidia has moved from being just a supplier to being an orchestrator of platforms. It no longer sells just chips; it sells AI factories, SDKs, simulation frameworks, and even AI supercomputers for individual researchers through Project DIGITS. These are high-margin, repeat-use systems with built-in software subscriptions and developer dependency. And with each Blackwell deployment, Nvidia expands its installed base, driving CUDA deeper into enterprise workflows.

Nvidia’s Actual Flywheel

Each new software enhancement improves performance on the installed hardware base, extends product life, boosts customer ROI, and increases gross margin. This compression of compute costs fuels adoption while also deepening lock-in. Few businesses can claim their product runs faster after deployment without any hardware upgrade. Nvidia can. Margin resilience and the reinvestment flywheel show that this is not a capital-heavy chip cycle but a software-infused, full-stack monetization engine.

Source: Q4-Deck
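The claim that software updates make already-deployed hardware cheaper to run can be made concrete with simple serving arithmetic. The sketch below is a minimal illustration, assuming a fixed hourly cost for a deployed system and treating a software update purely as a throughput multiplier; the function name, the $4.00/hour cost, and the throughput figures are hypothetical placeholders, not Nvidia data.

```python
def cost_per_million_tokens(hourly_cost_usd: float, tokens_per_second: float) -> float:
    """Serving cost per one million tokens for a system with a fixed hourly cost.

    Hypothetical model: the hourly cost (amortized hardware, power, hosting)
    does not change after a software update; only throughput changes.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return hourly_cost_usd * 1_000_000 / tokens_per_hour


# Hypothetical numbers for illustration only.
HOURLY_COST = 4.00       # assumed all-in $/hour for one deployed system
BASELINE_TPS = 1_000     # assumed tokens/second before a software update
SOFTWARE_SPEEDUP = 2.0   # assumed throughput gain from the update alone

before = cost_per_million_tokens(HOURLY_COST, BASELINE_TPS)
after = cost_per_million_tokens(HOURLY_COST, BASELINE_TPS * SOFTWARE_SPEEDUP)
print(f"before: ${before:.2f}/M tokens, after: ${after:.2f}/M tokens")
```

Because the hardware cost is already sunk, any throughput gain from software flows straight into a lower cost per token, which is the mechanism behind the "runs faster once deployed" claim.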

Nvidia’s Moats Have Multiplied: CUDA, Context, and Containment

Classic semiconductor moats come from process-node leadership, supply chain depth, and customer entrenchment. Nvidia has these in place, but its real moat is even more defensible: software containment. CUDA is now the de facto operating system for accelerated computing. In the nearly two decades since its introduction, it has become so thoroughly entrenched across AI frameworks, academic tools, and enterprise systems that switching away from it is not just costly but virtually impossible.

The strength here is not just adoption; it is velocity. Nvidia’s ability to release domain-specific libraries for applications as diverse as digital twins and genomics lets it move into new verticals without distribution friction. The moment a new AI use case becomes relevant, Nvidia has the stack ready to go. And because CUDA is both vendor-owned and highly optimized for Nvidia’s GPUs, the company captures the full-stack economics: hardware, software, and services.

Most Recent Growth Is in Context Windowing and Token-Scale Optimization

Blackwell GPUs support FP4 precision and are optimized for demanding workloads such as retrieval-augmented generation (RAG), agentic AI, and multimodal reasoning. It is not just about compute; it is about latency-sensitive, memory-intensive infrastructure that few can deliver at scale. Nvidia’s lead here is amplified by its NVLink interconnect, which enables all-to-all GPU communication within clusters, a capability critical for contemporary LLM inference.
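The memory side of the FP4 argument comes down to simple arithmetic: weight storage scales with bits per parameter. The sketch below estimates weight memory for a hypothetical 70-billion-parameter model. It counts weights only and ignores KV cache, activations, and the per-block scale factors that practical FP4 formats store alongside the 4-bit values, so real footprints are somewhat larger.

```python
# Bits used to store each weight at common inference precisions.
BITS_PER_PARAM = {"FP16": 16, "FP8": 8, "FP4": 4}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes).

    Weights only: excludes KV cache, activations, and any scale-factor
    overhead used by block-scaled low-precision formats.
    """
    bits = BITS_PER_PARAM[precision]
    return num_params * bits / 8 / 1e9


PARAMS = 70e9  # hypothetical 70B-parameter model
for precision in ("FP16", "FP8", "FP4"):
    # Prints 140 GB, 70 GB, and 35 GB respectively.
    print(f"{precision}: {weight_memory_gb(PARAMS, precision):.0f} GB")
```

Cutting the weight footprint to a quarter of FP16 means the same GPU memory can hold a larger model or leave more room for the long-context KV caches that RAG and agentic workloads consume.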

In addition, new tools such as Cosmos and Isaac GR00T open up defensible ground in physical AI. These CUDA-accelerated SDKs create lock-in across robotics, autonomous vehicles (AVs), and simulation-intensive commercial applications. The result is a platform on which usage drives not just spending but increasing dependency. The more users build on CUDA, the more they commit to Nvidia’s roadmap and the less likely they are to unwind. Nvidia’s defensibility grows with its CUDA flywheel, full-stack inference platform, and developer containment approach, widening the moat with each additional deployment.

Source: GTC 2025

The Inflection Point That No One Sees: Inference, Sovereign AI, and Token Economics

Much of the enthusiasm about Nvidia centers on training large AI models, but the true unlock is inference, the moment when models are put to real-world use. While each model is trained once, inference is an ongoing, repeated workload that scales with user interaction and expanding use cases. Nvidia has recognized this and designed its latest Blackwell architecture inference-first, a departure from what came before.
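The "trained once, run forever" logic can be sketched as a crossover calculation: a one-time training cost set against an inference workload that recurs every period and grows with usage. The function below is a hypothetical illustration in arbitrary compute units; its name and inputs (training cost, first-period inference demand, growth rate) are assumptions, not figures from Nvidia or any model provider.

```python
def crossover_period(training_compute: float,
                     first_period_inference: float,
                     growth_per_period: float) -> int:
    """First period (1-indexed) in which cumulative inference compute exceeds
    the one-time training compute. All units are arbitrary and hypothetical."""
    if first_period_inference <= 0:
        raise ValueError("first_period_inference must be positive")
    if growth_per_period < 0:
        raise ValueError("growth_per_period must be non-negative")
    cumulative = 0.0
    demand = first_period_inference
    period = 0
    while cumulative <= training_compute:
        period += 1
        cumulative += demand              # inference recurs every period
        demand *= 1 + growth_per_period   # and grows with adoption
    return period


# Hypothetical: training costs 100 units; inference starts at 10 units per period.
print(crossover_period(100, 10, 0.0))   # flat usage → 11
print(crossover_period(100, 10, 0.10))  # usage growing 10% per period → 8
```

When usage grows, the crossover arrives sooner, and every period after it is purely recurring workload, which is the persistence this section describes.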

Inference is not only more persistent but also monetizable. Blackwell’s FP4 optimization, combined with capabilities such as Dynamo, enables disaggregated serving and efficient long-context token processing. This lets customers deploy long-context models at scale with improved performance, and at reduced power consumption, turning GPUs into persistent revenue engines. Output is no longer measured in FLOPS but in tokens, responses, and downstream productivity gains.

In Tandem, There Is A New Demand Vector: Sovereign AI

Governments are no longer content to leave their AI ambitions in the hands of foreign hyperscalers. Instead, they are building national-scale AI factories: purpose-built data centers with local infrastructure, powered by local data, and based in local jurisdictions. And Nvidia is the only supplier with a turnkey offering, covering everything from GPUs and NVLink switches to software stacks, training designs, and inference frameworks.

This is not theoretical. Countries in Europe, Asia, and the Americas are already signing deals for Nvidia sovereign stacks. These are multi-year, politically secure, high-margin deals. As the geopolitical value of AI infrastructure rises, Nvidia stock no longer represents simply a technology provider but a national strategic ally.

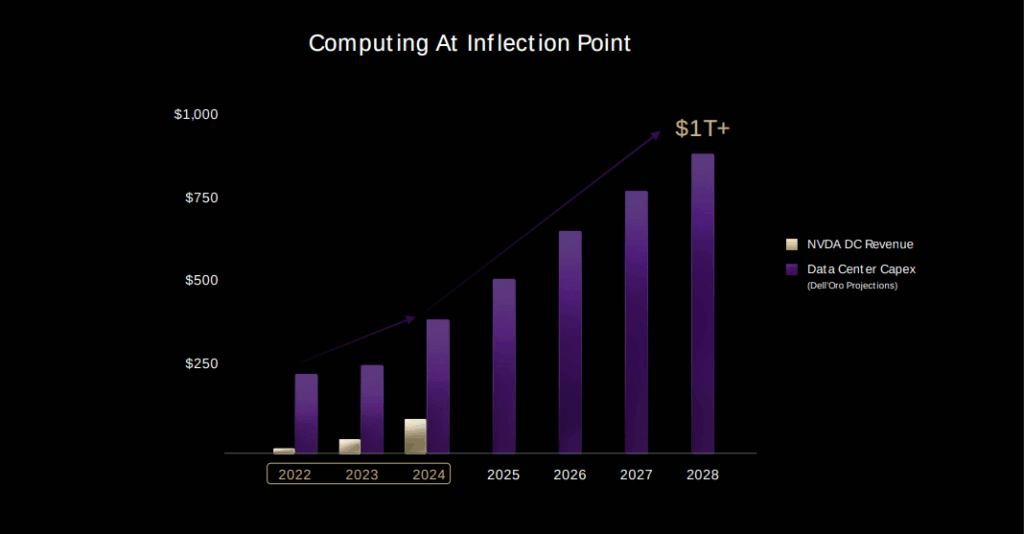

This double inflection, inference monetization and sovereign-level AI adoption, creates a structural demand profile that consensus models underappreciate. Wall Street continues to model Nvidia as a capex-intensive supplier riding hyperscaler spending. In reality, it is now collecting rent on inference workloads and institutionalizing demand at the national level. The market has priced in neither inference as the leading AI workload nor sovereign AI as a multi-decade infrastructure segment, and Nvidia leads in both.

Source: GTC 2025

Nvidia Stock’s Valuation Disparities and Virality Triggers: Is Perfection Priced In?

Nvidia’s high valuation has been a source of ongoing controversy. At 35x forward earnings, skeptics argue that perfection is priced in. However, this view overlooks Nvidia’s multi-dimensional growth. Unlike SaaS businesses with slowing ARR growth or consumer technology companies facing saturated markets, Nvidia’s TAM is expanding along multiple dimensions, including cloud, sovereign, industrial, and personal AI computing.
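The growth argument can be expressed as simple multiple compression. If the share price stayed flat while forward earnings grew at an annual rate g for n years, today’s 35x multiple would fall to 35 divided by (1 + g) raised to the n. The 30% growth rate and three-year horizon below are hypothetical inputs chosen for illustration, not forecasts:

```latex
\text{P/E}_n = \frac{\text{P/E}_0}{(1+g)^n}
\qquad\Rightarrow\qquad
\text{P/E}_3 = \frac{35}{(1.30)^3} = \frac{35}{2.197} \approx 15.9
```

Under those assumptions, the stock would trade near 16x earnings three years out without any price decline, which is why a fast-expanding TAM can make a high starting multiple less demanding than it first appears.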

Critically, the bulk of Nvidia’s future product pipeline (Rubin, Blackwell Ultra, and Vera Rubin) is not captured in sell-side models. These models also leave out the earnings power of sovereign AI contracts, AI Factory licensing, and simulation-driven robotics economics, as well as the compounding impact of token-scale economics. As inference becomes the primary AI workload, Nvidia’s GPUs are increasingly tied to recurring usage, much like higher-margin cloud software.

Retail viral momentum is also taking hold. Recent news about Project DIGITS, a $3,000 personal AI supercomputer, drew enormous attention on social platforms. So did robotics demonstrations of Cosmos and agentic AI workflows built with NeMo. These are not just product launches; they are narrative drivers that reshape public and investor sentiment. The memetification of Nvidia’s platforms, like Tesla’s breakout in 2019, could become a second-order rerating trigger.

The market may eventually shift its valuation lens from cyclical chip producer to monopolist of embedded infrastructure. If it does, multiples may expand rather than contract. And if sovereign AI demand accelerates, the upside could be nonlinear. The valuation is high today, but not outlandish if you view Nvidia as a platform collecting rent on the next $1T of AI infrastructure spending.

Source: YCharts

Conclusion: Token Printer of the AI Economy

Nvidia stock no longer represents just a graphics processing unit company. Nvidia is increasingly the foundational infrastructure of the AI age. From agentic AI and sovereign compute to simulation-driven robotics and token-scale inference, Nvidia is investing in the digital economy at a scale no other company matches.

Its platform breadth, software flywheel, and architectural cadence position it as one of the few companies able to compound at trillion-dollar scale. The KPIs worth monitoring are not just EPS and gross margins but tokens per watt, context scaling, sovereign deployments, and CUDA developer growth. If Blackwell adoption continues at this pace and Rubin launches on schedule, Nvidia stock’s re-rating from cyclical chip vendor to structural infrastructure monopoly could be fast and dramatic.
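"Tokens per watt" is usually read as throughput per unit of power, which simplifies to tokens per joule, since one watt is one joule per second. The sketch below shows one way to compute that KPI and the energy needed for a million tokens; the function names and the throughput and power numbers are hypothetical placeholders, not measured Blackwell or Rubin figures.

```python
JOULES_PER_KWH = 3.6e6  # 1 kWh = 3.6 million joules


def tokens_per_joule(tokens_per_second: float, avg_power_watts: float) -> float:
    """Throughput per watt: (tokens/s) / (J/s) simplifies to tokens per joule."""
    if avg_power_watts <= 0:
        raise ValueError("avg_power_watts must be positive")
    return tokens_per_second / avg_power_watts


def kwh_per_million_tokens(tokens_per_second: float, avg_power_watts: float) -> float:
    """Energy needed to generate one million tokens, in kilowatt-hours."""
    joules = 1_000_000 / tokens_per_joule(tokens_per_second, avg_power_watts)
    return joules / JOULES_PER_KWH


# Hypothetical comparison of two systems drawing the same average power.
for name, tps, watts in [("system A", 5_000, 1_000), ("system B", 20_000, 1_000)]:
    print(f"{name}: {tokens_per_joule(tps, watts):.1f} tokens/J, "
          f"{kwh_per_million_tokens(tps, watts):.4f} kWh per 1M tokens")
```

Tracking the metric this way ties hardware generations and software updates directly to energy cost per token, which is the unit that matters once inference dominates.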

Disclosure:

Yiannis Zourmpanos has a beneficial long position in the shares of NVDA either through stock ownership, options, or other derivatives.